Background

The machine used computer vision to detect joints, but some joints would inevitably be misidentified. The question was whether it would be more productive to catch the algorithm's errors up front or to fix the resulting mistakes after the fact. Our Operator Experience Team wanted to learn how our tapers viewed the tradeoff between agency and productivity.

Agency vs. Productivity

The case for full autonomy was that a completely autonomous system, while faulty at first, would eventually become robust and reliable, since the technology could only get better. The open question was whether its baseline level of proficiency would provide enough perceived benefit to outweigh its short-term deficiencies. In other words, if operators think the machine is doing well even while it still makes mistakes, they will only like it more as it improves.

At its core, the question was whether the company should invest in full autonomy (for this feature) or design a system that kept the human in the loop.

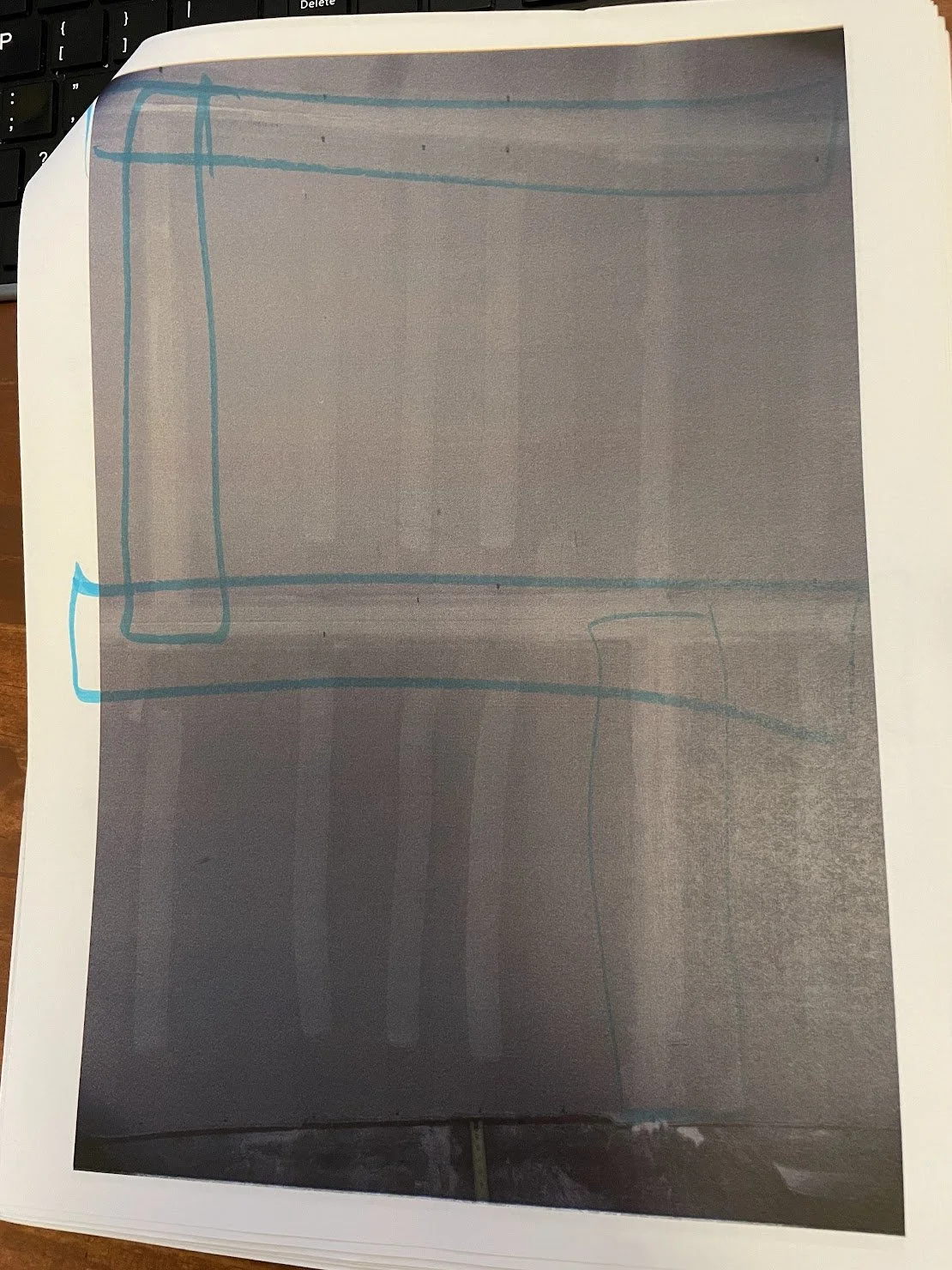

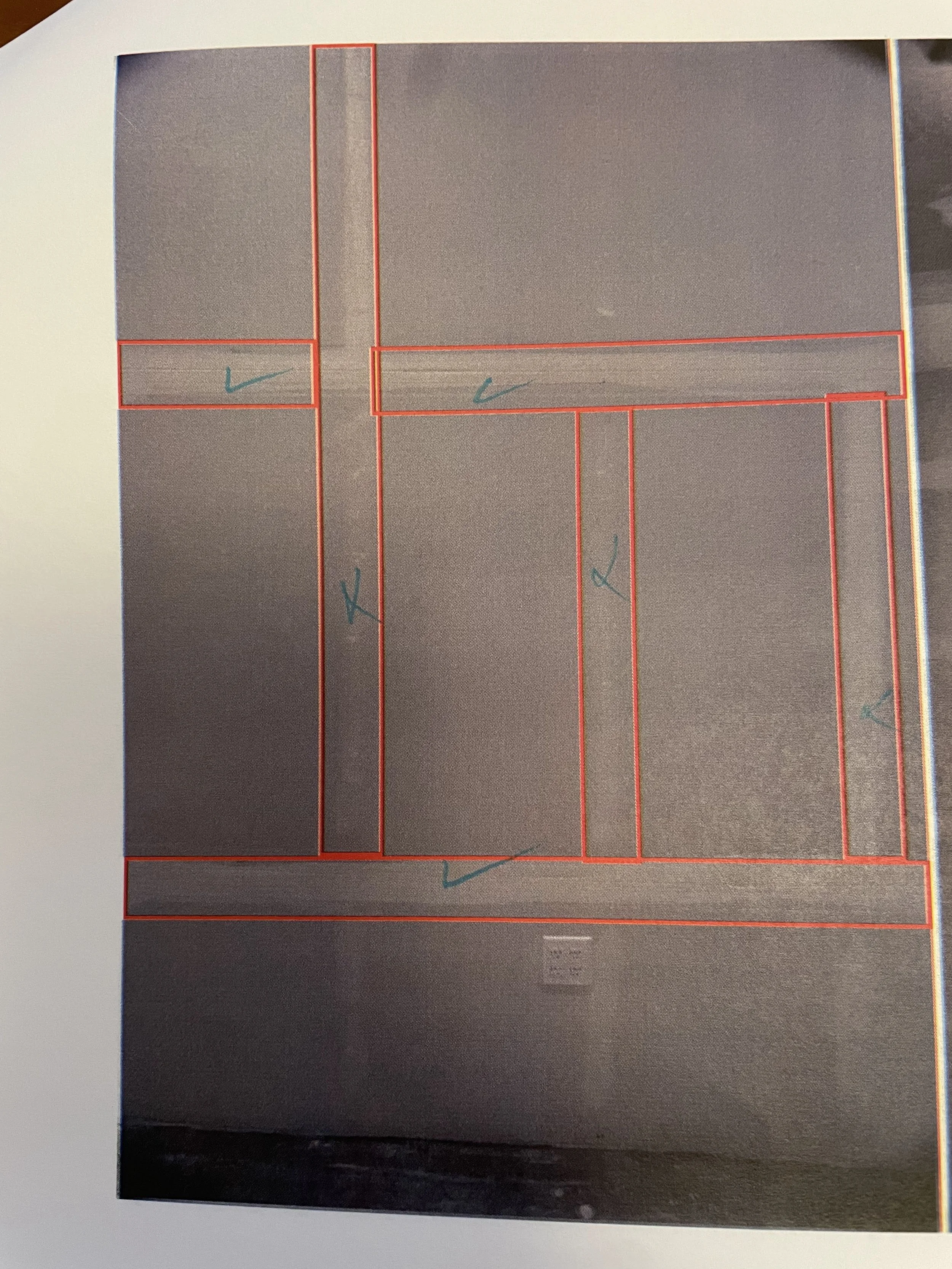

Targeted Spray User Testing - The Paper Prototype

To address the question of taking the human out of the loop, we gave our users two scenarios: one in which they identified every joint themselves, and one in which they had total oversight over a joint detection system. To avoid potential struggles with a digital UI, we had users work through printed images with a pen and complete two exercises: first, identify all the joints in a set of images, and second, confirm the accuracy of the detected joints in the images. I then interviewed the participants to see how they perceived the two tasks.

Findings - Expertise, Agency, and Reliability

The interviews proved illuminating. We initially predicted that participants would grow tired of selecting each joint individually in every image. In fact, they preferred drawing their own boxes: the effort of drawing was negligible, and participants felt more empowered when they could rely on their own knowledge and intuition to make their selections. Our users prided themselves on their intuition, knowledge, and experience, and enjoyed the freedom to flex that strength.

Additionally, in the second exercise, participants took the “failed” detections as a sign of a more critical system failure. They shared that they might think the machine was broken or in need of service. Not only did people prefer the “entirely manual” system, but routine computer vision failures also had the potential to hurt the perceived reliability of the system.

Research Impact

In presenting these findings, my team and I recommended that the company devote time and design resources to creating a seamless UI that lets users quickly identify and mark drywall joints. These findings unlocked resources for prototype development and further usability testing (see Part 2). As a result of these efforts, Canvas has released a drywall joint-picker that has consistently remained one of the easiest and most learnable parts of the new system.